Inchoate Thoughts on New Programming Abstractions for the Cloud

I watched an InfoQ video of Barbara Liskov at OOPSLA this evening. At the end of her talk, while looking at the challenges ahead, she said, “I’ve been waiting for thirty years for a new abstraction mechanism,” and, “There’s a funny disconnect in how we write distributed programs. You write your individual modules, but then when you want to connect them together you’re out of the programming language, and sort of into this other world. Maybe we need languages that are a little more complete now so that we can write the whole thing in the language.” Her talk was an interesting journey through the history of computer science, seen through the lens of her work, but this notion connected with something I’ve been thinking about lately.

Earlier this month I was catching up on the happenings on Channel9 and stumbled on this conversation between Erik Meijer and Dave Thomas. I would have preferred a different interview style, but Dave Thomas’ opinions are always interesting. “I find it hard to believe that people would build a business application in Java or C#. […] The real opportunity is recognizing that there is really no need for people to do all of the stuff they have to do today. And, I really believe that is fundamentally a language problem, whether that’s through an extended SQL, or it’s a new functional language, whether it’s a vector functional language; I can see lots of possibilities there. […] I see a future where there are objects in the modeling layer, but not in the execution infrastructure.”

I see a connection between Liskov’s desire for a “new abstraction mechanism” and Thomas’ “language problem”. If you look at the history of “execution infrastructure”, there has been an unremitting trend toward greater and greater abstraction of the hardware that makes it all happen. From twiddling bits, to manipulating registers, to compiled languages, to virtual machine bytecode and attendant technologies like garbage collection, to interpreters, to very declarative constructs, we continually move away from the hardware to focus on the actual problems that we began writing the program to solve in the first place(1). Both Liskov and Thomas are bothered by the limits on the expressivity of languages; that is, programming languages are still burdened with the legacy of the material world.

I think this may very well be the point of “Oslo” and the “M” meta-language. One might view that effort as a concession that even DSLs are too far burdened by their host language’s legacy to effectively move past the machine programming era into the solution modeling era. So, rather than create a language with which to write solution code, create a language/runtime to write such languages. This is really just the logical next step, isn’t it?

I didn’t quite understand this viewpoint at the time, but I had this discussion to some extent with Shy Cohen at TechEd09. I just didn’t grasp the gestalt of “Oslo”. This certainly wasn’t a failing of his explanatory powers nor, hopefully, of my ability to understand. Rather, I think it’s because they keep demoing it as one more data access technology. The main demo is to develop an M grammar of some simple type and have a database created. Once again, the legacy of computing strikes. To make the demo relevant, they have to show you how it works with all your existing infrastructure.

So, maybe the solution to developing new abstractions is to once and for all abstract away the rest of the infrastructure. Both Liskov and Thomas were making this point. Why should we care how and where the data is stored? Why should most developers care about concurrency? Why should we have to understand the behemoth that is WCF in order to get modules/systems/components talking to each other?

Let’s start over with an abstraction of the entirety of computing infrastructure(2): servers, networking, disks, backups, databases, etc. We’ll call it, oh, I don’t know… a cloud. Now, what properties must a cloud exhibit in order to ensure this abstraction doesn’t leak? I suggest we can examine why existing abstractions in computing have been successful, understand the problems they solve at a fundamental level, and then ensure that our cloud can do the same. Some candidates for this examination are: relational databases, virtual machines/garbage collectors, REST, Ruby on Rails, and LINQ. I’m sure there are countless other effective abstractions that could serve as guideposts.

Should we not be able to express things naturally in a solution modeling language? Why can’t I write, “When Company X sends us a shipment notification for the next Widget shipment, make sure that all Whatzit production is expedited. And, let me know immediately if Whatzit production capacity is insufficient to meet our production goals for the month.” Sure, this is a bit imperative, but it’s imperatively modeling a solution, not instructions on how to push bits around to satisfy the solution model; in that sense it is very, very declarative. I believe the cloud abstraction enables this kind of solution modeling language.

How about a cloud as the ambient monad for all programs? What about a Google Wave bot as a sort of REPL for this solution modeling language? What about extensive use of the Maybe monad or amb-style evaluation in a constraint-driven lexicon so that imperative rules like those in the sample above are evaluated in a reactive rather than deterministic fashion?
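To make the amb and Maybe idea a little more concrete, here is a minimal sketch in Python. It is only an illustration under my own assumptions: the names `amb` and `first_or_none`, the shift and line counts, and the 200-units-per-shift figure are all hypothetical, and a plain generate-and-test loop stands in for real backtracking. The rule is stated as a constraint, the evaluator searches for a plan that satisfies it, and "no plan" comes back as `None` (the Maybe-style answer that would trigger the "let me know immediately" part of the Whatzit rule).

```python
from itertools import product
from typing import Callable, Dict, Iterable, Iterator, Optional


def amb(choices: Dict[str, Iterable[int]],
        constraint: Callable[..., bool]) -> Iterator[Dict[str, int]]:
    """Lazily yield every assignment of the named choices that satisfies the constraint."""
    names = list(choices)
    for values in product(*(choices[name] for name in names)):
        candidate = dict(zip(names, values))
        if constraint(**candidate):
            yield candidate


def first_or_none(solutions: Iterator[Dict[str, int]]) -> Optional[Dict[str, int]]:
    """Maybe-style result: the first solution if one exists, otherwise None."""
    return next(solutions, None)


# Hypothetical figures for the Whatzit rule: each shift on each line makes 200 units.
MONTHLY_GOAL = 1000
UNITS_PER_SHIFT = 200


def meets_goal(shifts: int, lines: int) -> bool:
    return shifts * lines * UNITS_PER_SHIFT >= MONTHLY_GOAL


# A feasible search space: some combination of shifts and lines reaches the goal.
plan = first_or_none(amb({"shifts": [1, 2, 3], "lines": [1, 2]}, meets_goal))

# An infeasible search space: capacity is insufficient, so the answer is None.
no_plan = first_or_none(amb({"shifts": [1], "lines": [1]}, meets_goal))

if no_plan is None:
    print("Whatzit capacity is insufficient for this month's goal")
```

The point of the sketch is that the rule author writes only `meets_goal`; how candidates are enumerated, and where that work runs, is left entirely to the evaluator.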

- Quantum computing productively remains undecided as to “how” it actually works. That is, “how” is somewhat dependent on your interpretation of quantum mechanics. Maybe an infinite number of calculations occur simultaneously in infinite universes, with the results summed over via quantum mechanical effects.

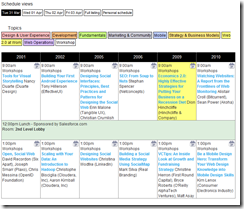

- I should say here that I think the Salesforce.com model is pretty far down this path, as is Windows Azure to a lesser extent. Crucially, neither of these platforms mitigates the overhead of persistence and communications constructs.